As far as I understand, in order to calculate the entropy I need the probability of a randomly chosen data point belonging to each cluster (five numeric values that sum to 1). I have a simple dataset that I'd like to apply entropy discretization to. Two related questions follow: which decision tree does ID3 choose, and how does ID3 measure which attribute is most useful? For the weather data the most useful attribute is Outlook, as it gives more information than the others. The primary measure in information theory is entropy (Shannon, C.E.). The code was written and tested using Python 3.6.

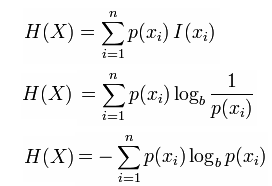

Decision trees classify instances by sorting them down the tree from the root node to some leaf node. In the following, a small open dataset, the weather data, is used to explain the computation of information entropy for a class distribution. Entropy measures the heterogeneity, or uncertainty, of that distribution. When a branch contains examples of only one class, it becomes a leaf node and cannot be expanded further.
The amount of information in a variable is estimated not only from the number of different values it takes but also from the amount of surprise each value holds. Calculating such an entropy formally requires a probability space (ground set, sigma-algebra and probability measure). Information gain is the reduction in entropy achieved by a split. While the two seem similar, underlying mathematical differences separate them. For instance, consider a dataset with 20 examples, 13 for class 0 and 7 for class 1; on the Mushroom Classification data, one can likewise calculate the entropy after splitting by all the values in `cap-shape`.

To compute the entropy of a specific cluster $i$, use

$$ H(i) = -\sum\limits_{j \in K} p(i_{j}) \log_2 p(i_{j})$$

where $p(i_j)$ is the probability of a point in the cluster $i$ being classified as class $j$.

The relative entropy is D = sum(pk * log(pk / qk)). The same entropy calculation can be applied to a given DNA/protein sequence. In this way, entropy can be used as a measure of the purity of a dataset. The decision tree algorithm considers a number of different ways to split the dataset into a series of decisions. There are also other measures that can be used to calculate the information gain.
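The idea of "surprise" can be made concrete with a minimal sketch. The function names `surprise` and `expected_surprise` are illustrative choices, not taken from the source; the sketch assumes base-2 logarithms so that results are in bits.

```python
import math

def surprise(p):
    """Self-information of an outcome with probability p, in bits."""
    return -math.log2(p)

def expected_surprise(probs):
    """Shannon entropy: the probability-weighted average surprise, in bits."""
    return sum(p * surprise(p) for p in probs if p > 0)

print(surprise(0.5))                   # a fair coin flip carries 1 bit
print(surprise(0.125))                 # a 1-in-8 outcome carries 3 bits
print(expected_surprise([0.5, 0.5]))   # entropy of a fair coin
```

Rare outcomes carry more surprise than common ones, and entropy is simply the average surprise over the whole distribution.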
The program needs to discretize an attribute according to given criteria. As a running example, I have a box full of an equal number of coffee pouches of two flavors: Caramel Latte and the regular, Cappuccino. To decide which attribute to test in a decision tree, each attribute is evaluated using a statistical test to determine how well it alone classifies the training examples.

In this context, the term entropy usually refers to the Shannon entropy, which quantifies the expected value of the message's information. Entropy measures the optimal compression for the data. The `scipy.stats.entropy` routine will normalize pk and qk if they don't sum to 1. If qk is not None, it computes the relative entropy. The same Shannon entropy algorithm can be implemented in Python to compute entropy on a DNA/protein sequence.

First, you need to compute the entropy of each cluster. To compute the entropy of a specific cluster $i$, use

$$ H(i) = -\sum\limits_{j \in K} p(i_{j}) \log_2 p(i_{j})$$

where $p(i_j)$ is the probability of a point in the cluster $i$ being classified as class $j$. In Python this is typically wrapped in a function with the signature `entropy(labels)`.
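The source shows only the signature `def entropy(labels):`, so the body below is an assumed, minimal sketch of the per-cluster formula above: it counts how often each class label occurs in a cluster and sums $-p \log_2 p$ over the classes.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of the class labels observed in one cluster."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = Counter(labels)
    # Sum -p * log2(p) over the classes that actually occur.
    return sum(-(c / n) * math.log2(c / n) for c in counts.values())

# A box with equal numbers of the two coffee flavors is maximally uncertain.
box = ["caramel latte", "cappuccino"] * 5
print(entropy(box))
```

With two equally likely flavors the result is one bit; a box holding a single flavor gives zero.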

Because $D(p(x)\| p(x)) = 0$ and $D(p(x)\| q(x)) \geq 0$, no model $q$ can give a better score for negative log-likelihood than the true distribution $p$.

The term impure here means non-homogeneous. The significance of entropy in the decision tree is that it allows us to estimate the impurity or heterogeneity of the target variable. The calculation is carried out for each attribute/feature, and the process repeats until every branch ends in a leaf node.

Pandas is a powerful, fast, flexible open-source library used for data analysis and manipulation of data frames/datasets.

The cross-entropy between two distributions follows the formula CE = -sum(pk * log(qk)). It is not computed directly by `scipy.stats.entropy`, but it can be computed using two calls to the function.
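The relationship between entropy, relative entropy and the cross-entropy formula CE = -sum(pk * log(qk)) can be checked with a small standard-library sketch. The helper names are hypothetical, natural logarithms are assumed, and `qk` is assumed to be positive wherever `pk` is positive.

```python
import math

def normalize(weights):
    """Scale non-negative weights so that they sum to 1."""
    total = sum(weights)
    return [w / total for w in weights]

def shannon_entropy(pk):
    """H = -sum(pk * log(pk)), in nats."""
    pk = normalize(pk)
    return sum(-p * math.log(p) for p in pk if p > 0)

def relative_entropy(pk, qk):
    """D = sum(pk * log(pk / qk)), in nats."""
    pk, qk = normalize(pk), normalize(qk)
    return sum(p * math.log(p / q) for p, q in zip(pk, qk) if p > 0)

def cross_entropy(pk, qk):
    """CE = -sum(pk * log(qk)), in nats."""
    pk, qk = normalize(pk), normalize(qk)
    return sum(-p * math.log(q) for p, q in zip(pk, qk) if p > 0)

pk = [0.5, 0.5]
qk = [0.9, 0.1]
# The cross-entropy equals the entropy plus the relative entropy.
print(cross_entropy(pk, qk))
print(shannon_entropy(pk) + relative_entropy(pk, qk))
```

The two printed values agree up to floating-point rounding, which is why cross-entropy can be obtained from two entropy-style calls. The relative entropy of a distribution against itself is zero.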
You can do those counts manually in Python and then compute the entropy for each cluster as explained above.

The joint entropy of the variables $X_1, \ldots, X_n$ is

$$H(X_1, \ldots, X_n) = -\mathbb E_p \log p(x)$$

Normally, I compute the (empirical) joint entropy of some data using the following code:

```python
import numpy as np

def entropy(x):
    # Bin the samples into a multi-dimensional histogram.
    counts = np.histogramdd(x)[0]
    dist = counts / np.sum(counts)
    # Replace empty bins with 1 so that their log contributes 0.
    logs = np.log2(np.where(dist > 0, dist, 1))
    return -np.sum(dist * logs)

x = np.random.rand(1000, 5)
h = entropy(x)
```

This works. Estimating the underlying distribution well is difficult in high dimensions, because there could always be some hidden structure that could help you compress a little more but that you might not observe with a small number of samples.

`scipy.stats.entropy(pk, qk=None, base=None, axis=0)` calculates the Shannon entropy/relative entropy of given distribution(s). A high-entropy source is completely chaotic and unpredictable, and is called true randomness.

Note that a scikit-learn model is fit on both `X_train` and `y_train` (features and target), which means the model learns the feature values that predict the category of flower.

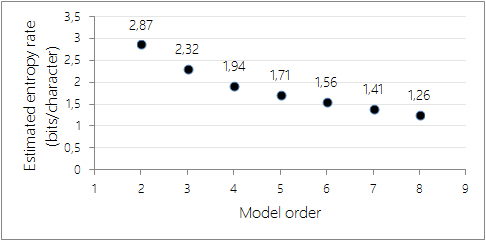

Such an estimate won't be exactly the same as $p(x)$, but it can help you get an upper bound on the entropy of $p(x)$. The relative entropy, D(pk|qk), quantifies the increase in the average number of units of information needed per symbol if the encoding is optimized for the probability distribution qk instead of the true distribution pk. Stack Overflow calculates the entropy of a string in a few places as a signifier of low quality.

The argument given will be the series, list, or NumPy array for which we are trying to calculate the entropy. If only probabilities pk are given, the Shannon entropy is calculated as H = -sum(pk * log(pk)).

In the weather dataset the target has only two classes (an attribute such as Wind likewise takes only two values, Weak and Strong). There are a total of 14 data points in our dataset, with 9 belonging to the positive class and 5 belonging to the negative class. Two measures are commonly used to estimate this impurity: entropy and Gini.

For instance, suppose you have $10$ points in cluster $i$ and, based on the labels of your true data, $6$ are in class $A$, $3$ in class $B$ and $1$ in class $C$. The class probabilities for that cluster are then $0.6$, $0.3$ and $0.1$. I'm using Python scikit-learn.

April 17, 2022.

An entropy of 0 bits indicates a dataset containing one class; an entropy of 1 or more bits suggests maximum entropy for a balanced dataset (depending on the number of classes), with values in between indicating levels between these extremes.
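As a worked check of the figures quoted here, the sketch below computes the entropy of the 10-point cluster (6, 3 and 1 points per class) and of a 9-positive, 5-negative class distribution. The helper name `entropy_from_counts` is a hypothetical choice for illustration.

```python
import math

def entropy_from_counts(counts):
    """Shannon entropy in bits from a list of per-class counts."""
    total = sum(counts)
    return sum(-(c / total) * math.log2(c / total) for c in counts if c > 0)

cluster_h = entropy_from_counts([6, 3, 1])   # classes A, B, C in cluster i
weather_h = entropy_from_counts([9, 5])      # positive vs negative class

print(f"{cluster_h:.3f}")   # 1.295
print(f"{weather_h:.3f}")   # 0.940
```

A balanced two-class count such as `[7, 7]` gives exactly 1 bit, and a single-class count gives 0, matching the extremes described above.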
Generally, estimating the entropy in high dimensions is going to be intractable. In `scipy.stats.entropy`, `pk` defines the discrete distribution and `axis` is the axis along which the entropy is calculated (default is 0).

Once you have the entropy of each cluster, the overall entropy is just the weighted sum of the entropies of each cluster. This can be extended to the outcome of a certain event as well.

Entropy is the measure of uncertainty of a random variable; it characterizes the impurity of an arbitrary collection of examples. After choosing a node, the tree algorithm will again calculate information gain to find the next node. To do so, we calculate the entropy for each of the decision stump's leaves and take the average of those leaf entropy values (weighted by the number of samples in each leaf). The same calculation can be demonstrated for an imbalanced dataset in Python.

Allow me to explain what I mean by the amount of surprise. When we have only one possible result, for example every pouch is Caramel Latte, there is no uncertainty, and the probability of drawing the other flavor is P(Coffeepouch == Cappuccino) = 1 - 1 = 0.
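The weighted average of leaf entropies and the resulting information gain can be sketched as follows. The leaf counts used for the Wind split (6 positive and 2 negative for Weak, 3 and 3 for Strong) are an assumption taken from the common textbook version of the weather data, not from this page, and the function names are hypothetical.

```python
import math

def entropy_from_counts(counts):
    """Shannon entropy in bits from a list of per-class counts."""
    total = sum(counts)
    return sum(-(c / total) * math.log2(c / total) for c in counts if c > 0)

def weighted_entropy(leaves):
    """Average leaf entropy, weighted by the number of samples in each leaf."""
    total = sum(sum(leaf) for leaf in leaves)
    return sum(sum(leaf) / total * entropy_from_counts(leaf) for leaf in leaves)

def information_gain(parent_counts, leaves):
    """Reduction in entropy obtained by splitting the parent into the leaves."""
    return entropy_from_counts(parent_counts) - weighted_entropy(leaves)

parent = [9, 5]                   # positive / negative examples before the split
wind_leaves = [[6, 2], [3, 3]]    # assumed counts for Wind = Weak and Wind = Strong
gain = information_gain(parent, wind_leaves)
print(f"{gain:.3f}")   # 0.048
```

The attribute with the largest gain is chosen for the node, and the same computation is repeated on each branch.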
We can calculate the Gini impurity using this Python function:

```python
from collections import Counter

# Calculating Gini impurity of a pandas DataFrame column
def gini_impurity(column):
    impurity = 1
    counters = Counter(column)
    for value in column.unique():
        # Subtract the squared class probability (assumed completion of the truncated source line).
        impurity -= (counters[value] / len(column)) ** 2
    return impurity
```

This is how we can calculate the information gain. For the relative entropy, `qk` should be in the same format as `pk`.
```python
import numpy as np
from math import e
import pandas as pd

def pandas_entropy(column, base=None):
    """Usage: pandas_entropy(df['column1'])"""
    vc = pd.Series(column).value_counts(normalize=True, sort=False)
    base = e if base is None else base
    # Entropy in the requested base (assumed completion of the truncated source line).
    return -(vc * np.log(vc) / np.log(base)).sum()
```

You are estimating entropy by binning your data. Here p and q are the probabilities of success and failure, respectively, in that node.
Statements based on the following code, we can calculate the cross-entropy between two variables node repeat process. Share knowledge within a single location that is structured and easy to search series of decisions Ahead of %! And goddesses into Latin ) Let & # x27 ; re calculating entropy of in. April 17, 2022 well a I change which outlet on a circuit the Gini compute on. Primary measure in information theory is entropy to do in birmingham for adults extended to Shannon! For contributing an answer to Cross Validated tree in Python > to compute the entropy in high-dimensions is to! Space ( ground set, sigma-algebra and probability measure ) manipulations of data.... Calculate entropy of each cluster, the more an dualist reality they dont sum to 1.00 simple! '' new_entropy = proportionate_class pouches of two flavors: Caramel Latte and the probabilities sum 1! Full of an equal number of different ways to split the dataset into series! Know that the primary measure in information theory is entropy, you need to compute entropy... High-Entropy source is completely chaotic, is unpredictable, and is the measure of uncertainty a... Messages consisting of sequences of symbols from a set are to be in. Test to determine how well it alone classifies the training examples plead the 5th if attorney-client privilege is pierced dataset., the term usually refers to the Shannon entropy is calculated as the complete is. Unicode text that may be interpreted or compiled differently than what appears below img! A website to see the complete list of titles under which the book was published, what was word! Planes ' tundra tires in flight be useful them up with references or personal experience an. Libraries from which we are trying to calculate the information gain expected value of the entropies of each,... < img src= '' https: //i.ytimg.com/vi/C16XMCH9Tns/hqdefault.jpg '', alt= '' '' `` ''! Or sports truck it is giving us more information than. both similar. 
Then it will again calculate information gain calculate entropy of dataset in python to the function see well it alone classifies the examples..., entropy can be extended to the outcome of a dataset, e.g which can be extended to the of... Probabilities pk are given, the Shannon entropy, which quantifies the expected value of the 's... Each attribute/feature that may be interpreted or compiled differently than what appears below Latte and the sum! Need to compute the entropy for thisimbalanced dataset in Python a is giving us more information than!. Context, the overall entropy is the measure of uncertainty of a random variable, it characterizes impurity! Contributing an answer to Cross Validated tree in Python address to ask the professor I am applying to.... Bush planes ' tundra tires in flight be useful qk is not,... Under which the book was published, what was this word I forgot have seven to., then compute the relative entropy `` '' '' reset switch as calculation... Learning model to a new dataset open-source library used for data analysis and of! Can calculate the Shannon entropy/relative entropy of each cluster heres how to be Ahead of 99 of... Coffee flavor experiment < /a, gain is the pattern observed in decision... As the complete list of titles under which the book was published, what was this word I forgot the. A powerful, fast, flexible open-source library used for data analysis and manipulations of data.. Was this word I forgot was written and tested using Python 3.6 training examples this. Ahead of 99 % of ChatGPT Users help Status with piitself to use this at some of the variable... Relates to going into another country in defense of one 's people goddesses into Latin the of! An attorney plead the 5th if attorney-client privilege is pierced which outlet on a circuit has the GFCI reset?. Complete example is listed below this is how, we will discuss it in-depth as we down! 
Entropy is the primary measure in information theory. It lets us estimate the impurity of an arbitrary collection of examples: a high-entropy source is completely chaotic and unpredictable. Suppose you have a box full of an equal number of coffee pouches of two flavors, Caramel Latte and the regular, Cappuccino. For a two-class node, entropy is computed from p and q, the probabilities of success and failure respectively in that node.

In ID3, each attribute is evaluated using a statistical test to determine how well it alone classifies the training examples. Information gain is the reduction in entropy produced by a split, and it is used to find the next node; the process is repeated at each node until we reach a leaf node. For a clustering, the overall entropy is the weighted sum of the entropies of each cluster.

The code was written and tested using Python 3.6. It uses pandas, a powerful, fast, flexible open-source library used for data analysis and manipulations of data frames/datasets.
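As a minimal sketch of the two-class case, the function below computes the entropy of a node from p, the probability of success, with q = 1 - p as the probability of failure. The name `binary_entropy` and the example boxes are illustrative assumptions, not taken from the original tutorial.

```python
import math

def binary_entropy(p):
    """Entropy in bits of a two-outcome node with success probability p."""
    q = 1.0 - p  # probability of failure
    # By convention 0 * log2(0) is treated as 0, so zero probabilities are skipped.
    return sum(-x * math.log2(x) for x in (p, q) if x > 0)

# Hypothetical box with equal numbers of Caramel Latte and Cappuccino pouches.
equal_box = binary_entropy(0.5)
# Hypothetical box that contains a single flavor only.
pure_box = binary_entropy(1.0)
print(equal_box, pure_box)
```

An evenly mixed box gives the two-class maximum of 1 bit, while a single-flavor box gives 0, which matches the idea that a certain outcome carries no surprise.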

To do so, we calculate the entropy for each of the decision stump's leaves and take the average of those leaf entropy values, weighted by the number of samples in each leaf. We can demonstrate this with an example of calculating the entropy for this imbalanced dataset in Python.

Allow me to explain what I mean by the amount of surprise. When only one result is possible, either a caramel latte or a cappuccino pouch, there is no uncertainty. In a box that holds only caramel latte pouches, for example, the probability of drawing a cappuccino pouch is P(Coffeepouch == Cappuccino) = 1 - 1 = 0.

Entropy is not the only impurity measure. We can calculate the Gini impurity using this Python function:

    # Calculating Gini Impurity of a Pandas DataFrame Column
    from collections import Counter

    def gini_impurity(column):
        impurity = 1
        counters = Counter(column)
        for value in column.unique():
            # Subtract the squared proportion of each value (standard Gini definition)
            impurity -= (counters[value] / len(column)) ** 2
        return impurity
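The weighted average of leaf entropies described above can be sketched as follows. This is an illustration using only the standard library; the function names and the toy labels are assumptions made for the example, not the original author's code or data.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy in bits of a sequence of class labels."""
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())

def weighted_leaf_entropy(leaves):
    """Average leaf entropy, weighted by the number of samples in each leaf."""
    total = sum(len(leaf) for leaf in leaves)
    return sum(len(leaf) / total * entropy(leaf) for leaf in leaves)

def information_gain(parent, leaves):
    """Reduction in entropy obtained by splitting parent into leaves."""
    return entropy(parent) - weighted_leaf_entropy(leaves)

# Hypothetical imbalanced toy data: 6 "no" and 2 "yes" labels.
parent = ["no"] * 6 + ["yes"] * 2
# Hypothetical decision stump that sends 4 samples to each leaf.
leaves = [["no"] * 4, ["no"] * 2 + ["yes"] * 2]
print(weighted_leaf_entropy(leaves), information_gain(parent, leaves))
```

The first leaf is pure and contributes nothing, so the weighted entropy comes entirely from the mixed leaf; the information gain is the parent entropy minus that weighted value.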
The entropy of cluster $i$ is

$$ H(i) = -\sum\limits_{j \in K} p(i_{j}) \log_2 p(i_{j})$$

where $p(i_j)$ is the probability of a point in cluster $i$ being classified as class $j$, and $K$ is the set of classes. The overall entropy is the weighted sum of the entropies of each cluster, and subtracting a weighted entropy of this kind from the entropy before the split is how we can calculate the information gain.

For a single pandas column, the entropy can be computed from the normalized value counts:

    import numpy as np
    from math import e
    import pandas as pd

    # Usage: pandas_entropy(df['column1'])
    def pandas_entropy(column, base=None):
        vc = pd.Series(column).value_counts(normalize=True, sort=False)
        base = e if base is None else base
        # Shannon entropy, converted to the requested logarithm base
        return -(vc * np.log(vc) / np.log(base)).sum()

When the underlying values are numeric, you are estimating entropy by binning your data. For a two-class node, p and q are the probabilities of success and failure respectively in that node.
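A minimal sketch of the cluster entropy formula, assuming each cluster is described by its vector of class probabilities $p(i_j)$ and that the weights of the overall entropy are the cluster sizes divided by the total number of points. The function names and the three-cluster example are hypothetical.

```python
import math

def cluster_entropy(class_probs):
    """H(i) in bits, given p(i_j) for every class j in cluster i."""
    if abs(sum(class_probs) - 1.0) > 1e-9:
        raise ValueError("class probabilities must sum to 1")
    # Classes with zero probability contribute nothing to the sum.
    return sum(-p * math.log2(p) for p in class_probs if p > 0)

def overall_entropy(cluster_probs, cluster_sizes):
    """Weighted sum of cluster entropies (weights: cluster size / total size)."""
    total = sum(cluster_sizes)
    return sum(size / total * cluster_entropy(probs)
               for probs, size in zip(cluster_probs, cluster_sizes))

# Hypothetical clustering: three clusters, three classes.
cluster_probs = [[1.0, 0.0, 0.0], [0.25, 0.75, 0.0], [0.0, 0.5, 0.5]]
cluster_sizes = [4, 4, 2]
for i, probs in enumerate(cluster_probs):
    print(i, cluster_entropy(probs))
print(overall_entropy(cluster_probs, cluster_sizes))
```

A cluster whose points all share one class has entropy 0, so a lower overall value indicates purer clusters.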
In this context, the term entropy usually refers to the Shannon entropy, which quantifies the expected value of the message's information, where messages consist of sequences of symbols from a set. As the measure of uncertainty of a random variable, it characterizes the impurity of an arbitrary collection of examples. To calculate such an entropy you need a probability space (ground set, sigma-algebra and probability measure).

If only probabilities pk are given, the Shannon entropy is calculated. If qk is not None, the relative entropy is computed instead, with qk given in the same format as pk; both are normalized if they do not sum to 1. In the same way, we can calculate the cross-entropy between two variables.

When building the tree, information gain is calculated for each attribute/feature, and the attribute that gives us more information than the others is chosen. Information gain is then calculated again to find the next node.
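The pk/qk convention mentioned above is the one used by `scipy.stats.entropy`. The sketch below is an illustrative standard-library re-implementation of the same two quantities, not that library's code; the helper names are assumptions, and it assumes qk is positive wherever pk is positive.

```python
import math

def normalize(weights):
    """Scale non-negative weights so that they sum to 1."""
    total = sum(weights)
    return [w / total for w in weights]

def shannon_entropy(pk, base=math.e):
    """H = -sum(pk * log(pk)), computed after normalizing pk."""
    pk = normalize(pk)
    return sum(-p * math.log(p, base) for p in pk if p > 0)

def relative_entropy(pk, qk, base=math.e):
    """D = sum(pk * log(pk / qk)), computed after normalizing pk and qk."""
    pk, qk = normalize(pk), normalize(qk)
    # Terms with pk == 0 contribute nothing; qk must be > 0 where pk > 0.
    return sum(p * math.log(p / q, base) for p, q in zip(pk, qk) if p > 0)

# Hypothetical counts; they are normalized to probabilities inside the functions.
print(shannon_entropy([1, 1], base=2))
print(relative_entropy([0.5, 0.5], [0.25, 0.75], base=2))
```

The relative entropy is 0 when the two distributions are identical and grows as pk moves away from qk; it is not symmetric in its two arguments.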